目录

官网文档:

https://nvidia.github.io/TensorRT-LLM/quick-start-guide.html#launch-the-docker

https://github.com/triton-inference-server/tensorrtllm_backend

tensorrtllm_backend

bash展开代码git clone https://github.com/triton-inference-server/tensorrtllm_backend.git

cd tensorrtllm_backend

git submodule update --init --recursive

git lfs install

git lfs pull

挺大:2.5G tensorrtllm_backend/

启动容器

启动容器

bash展开代码docker run --rm -it --net host --shm-size=4g \

--ulimit memlock=-1 --ulimit stack=67108864 --gpus device=1 \

-v /data/xiedong/tensorrtllm_backend:/tensorrtllm_backend \

-v /data/xiedong/engines:/engines \

-v /data/xiedong/Qwen2.5-14B-Instruct/:/Qwen2.5-14B-Instruct/ \

nvcr.io/nvidia/tritonserver:24.11-trtllm-python-py3

这里选镜像:

https://catalog.ngc.nvidia.com/orgs/nvidia/containers/tritonserver/tags

准备 TensorRT-LLM 引擎文件

普通模型

bash展开代码cd /tensorrtllm_backend/tensorrt_llm/examples/qwen

# Convert weights from HF Tranformers to TensorRT-LLM checkpoint

python3 convert_checkpoint.py --model_dir /Qwen2.5-14B-Instruct \

--dtype float16 \

--tp_size 1 \

--output_dir ./tllm_checkpoint_1gpu_bf16

# Build TensorRT engines

trtllm-build --checkpoint_dir ./tllm_checkpoint_1gpu_bf16 \

--gemm_plugin float16 \

--output_dir /engines/gpt/fp16/1-gpu

想做量化

如果想要量化怎么搞?首先要看看TensorRT-LLM 支持的量化精度,每个模型被支持的量化精度不尽相同:

https://nvidia.github.io/TensorRT-LLM/reference/precision.html

https://github.com/NVIDIA/TensorRT-LLM/tree/rel/examples/gpt

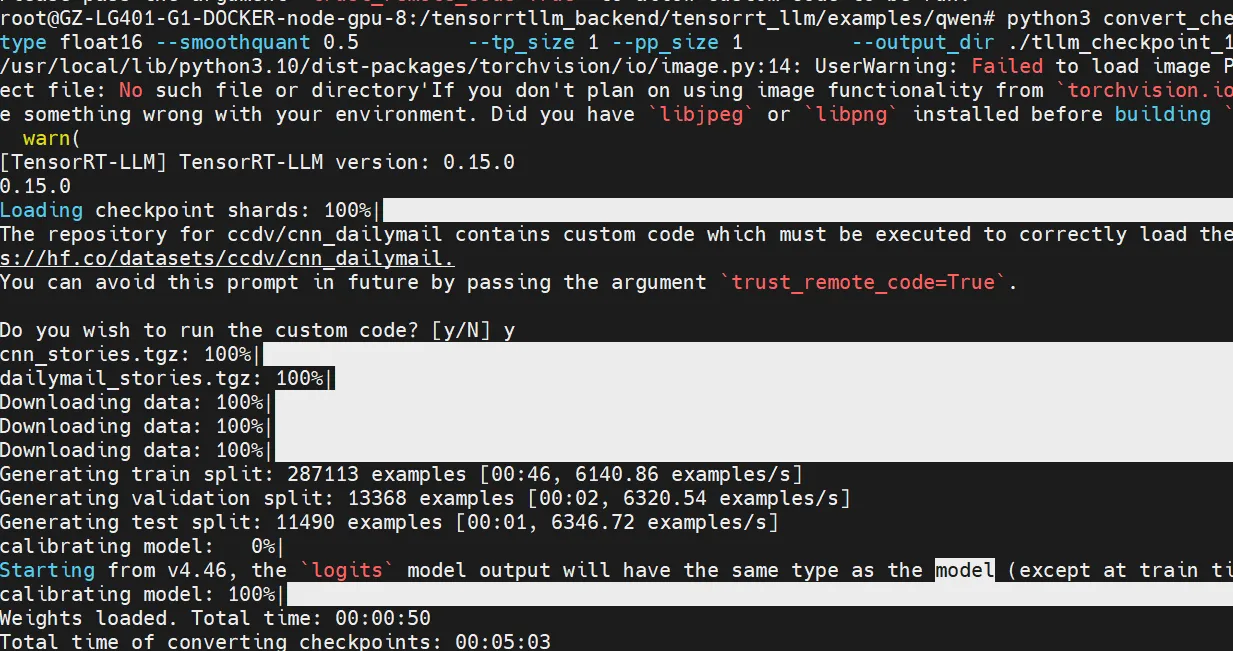

convert_checkpoint.py 本身提供了一些量化方式,基本是够用了。详细可看本身的--help。这个行为还自己下载量化矫正数据集去量化模型。下载的是这个数据集: https://huggingface.co/datasets/ccdv/cnn_dailymail 。

准备 TensorRT-LLM 引擎文件,并且是量化过的:

bash展开代码cd /tensorrtllm_backend/tensorrt_llm/examples/qwen

# Convert weights from HF Tranformers to TensorRT-LLM checkpoint 会自己下载量化校正数据集

python3 convert_checkpoint.py --model_dir /Qwen2.5-14B-Instruct \

--dtype float16 --smoothquant 0.5 \

--tp_size 1 --pp_size 1 \

--output_dir ./tllm_checkpoint_1gpu_int8-sq

# Build TensorRT engines

trtllm-build --checkpoint_dir ./tllm_checkpoint_1gpu_int8-sq \

--output_dir /engines/gpt/int8-sq/1-gpu

engines的不一样之处

https://github.com/triton-inference-server/tensorrtllm_backend/blob/main/docs/model_config.md

- max_batch_size 定义引擎可以处理的最大请求数。

- max_seq_len 定义单次请求的最大序列长度。

- max_num_tokens 定义每批删除填充后批量输入标记的最大数量。

--max_num_tokens MAX_NUM_TOKENS

is removed in each batch It equals to max_batch_size * max_beam_width by default, set this value as close

bash展开代码cd /tensorrtllm_backend/tensorrt_llm/examples/qwen

# 上一小节的量化

python3 convert_checkpoint.py --model_dir /Qwen2.5-14B-Instruct \

--dtype float16 --smoothquant 0.5 \

--tp_size 1 --pp_size 1 \

--output_dir ./tllm_checkpoint_1gpu_int8-sq

# Build TensorRT engines

trtllm-build --checkpoint_dir ./tllm_checkpoint_1gpu_int8-sq \

--output_dir /engines/gpt/int8-sq-haha/1-gpu --max_batch_size 1 --max_seq_len 512

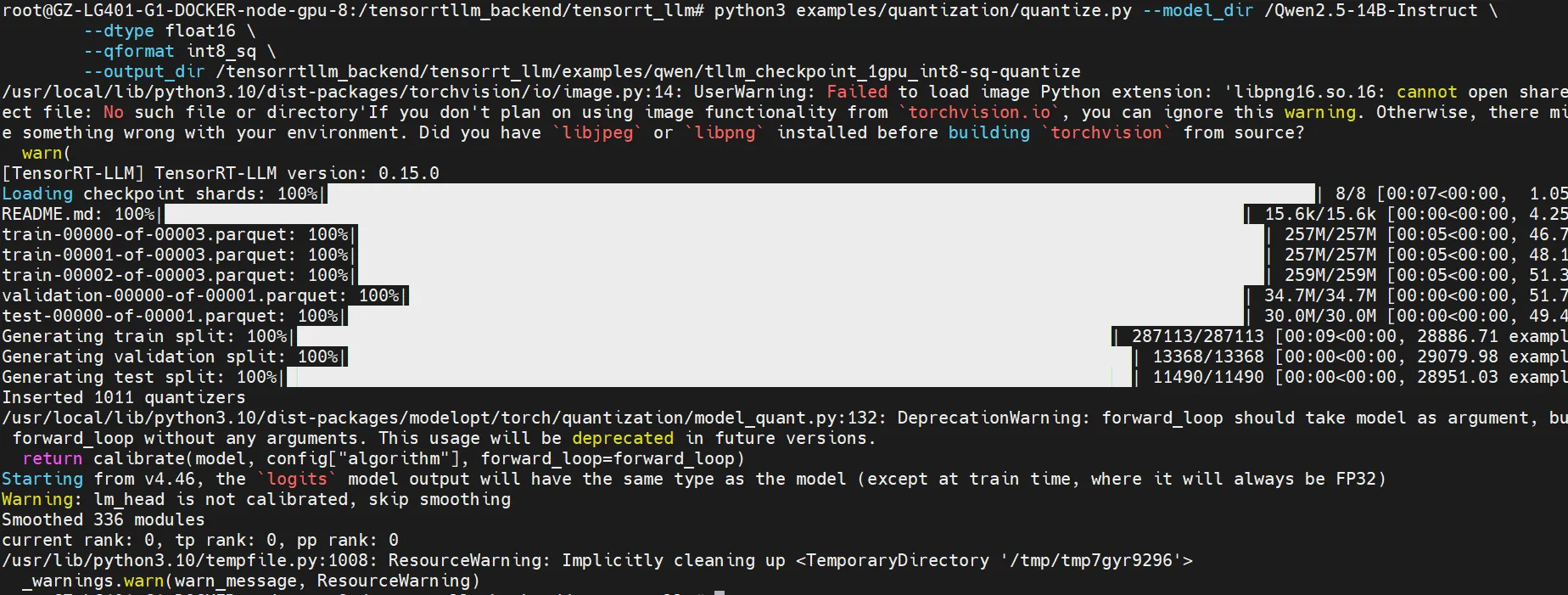

其他量化脚本-不推荐

也可以用另外一个脚本去量化:

bash展开代码cd /tensorrtllm_backend/tensorrt_llm

python3 examples/quantization/quantize.py --model_dir /Qwen2.5-14B-Instruct \

--dtype float16 \

--qformat int8_sq \

--output_dir /tensorrtllm_backend/tensorrt_llm/examples/qwen/tllm_checkpoint_1gpu_int8-sq-quantize

cd /tensorrtllm_backend/tensorrt_llm/examples/qwen

# Build TensorRT engines

trtllm-build --checkpoint_dir ./tllm_checkpoint_1gpu_int8-sq \

--output_dir /engines/gpt/int8-sq-quantize/1-gpu

quantize.py 参数之一:

-

qformat:指定应用于检查点的量化算法。

-

fp8:权重在张量维度上量化为 FP8。激活范围在张量维度上校准。

-

int8_sq:权重被平滑处理并在通道维度上量化为 INT8。激活范围在张量维度上校准。

-

int4_awq:权重被重新缩放并按块量化为 INT4。块大小由

awq_block_size指定。 -

w4a8_awq:权重被重新缩放并按块量化为 INT4。块大小由

awq_block_size指定。激活范围在张量维度上校准。 -

int8_wo:实际上对权重没有进行任何操作。当 TRTLLM 构建引擎时,权重在通道维度上量化为 INT8。

-

int4_wo:与 int8_wo 相同,但在 INT4 中。

-

full_prec:不进行量化。

-

准备 Triton 模型库

Triton 推理服务器提供来自启动服务器时指定的一个或多个模型存储库的模型。在 Triton 运行时,可以按照模型管理中的说明修改所提供服务的模型。

这些存储库路径是在使用 --model-repository 选项启动 Triton 时指定的。可以多次指定 --model-repository 选项以包含来自多个存储库的模型。组成模型存储库的目录和文件必须遵循所需的布局。假设存储库路径指定如下。

https://github.com/triton-inference-server/server/blob/main/docs/user_guide/model_repository.md

bash展开代码mkdir /engines/triton_model_repo/

cp -r /tensorrtllm_backend/all_models/inflight_batcher_llm/* /engines/triton_model_repo/

修改 Triton 模型配置

填写配置参数,执行这些指令:

bash展开代码ENGINE_DIR=/engines/gpt/int8-sq-haha/1-gpu

TOKENIZER_DIR=/Qwen2.5-14B-Instruct # HF模型所在

MODEL_FOLDER=/engines/triton_model_repo

TRITON_MAX_BATCH_SIZE=1 # 可以多给点,我这里为了测试下显存,给1

INSTANCE_COUNT=1

MAX_QUEUE_DELAY_MS=0

MAX_QUEUE_SIZE=1

FILL_TEMPLATE_SCRIPT=/tensorrtllm_backend/tools/fill_template.py

DECOUPLED_MODE=false

python3 ${FILL_TEMPLATE_SCRIPT} -i ${MODEL_FOLDER}/ensemble/config.pbtxt triton_max_batch_size:${TRITON_MAX_BATCH_SIZE}

python3 ${FILL_TEMPLATE_SCRIPT} -i ${MODEL_FOLDER}/preprocessing/config.pbtxt tokenizer_dir:${TOKENIZER_DIR},triton_max_batch_size:${TRITON_MAX_BATCH_SIZE},preprocessing_instance_count:${INSTANCE_COUNT}

python3 ${FILL_TEMPLATE_SCRIPT} -i ${MODEL_FOLDER}/tensorrt_llm/config.pbtxt triton_backend:tensorrtllm,triton_max_batch_size:${TRITON_MAX_BATCH_SIZE},decoupled_mode:${DECOUPLED_MODE},engine_dir:${ENGINE_DIR},max_queue_delay_microseconds:${MAX_QUEUE_DELAY_MS},batching_strategy:inflight_fused_batching,max_queue_size:${MAX_QUEUE_SIZE}

python3 ${FILL_TEMPLATE_SCRIPT} -i ${MODEL_FOLDER}/postprocessing/config.pbtxt tokenizer_dir:${TOKENIZER_DIR},triton_max_batch_size:${TRITON_MAX_BATCH_SIZE},postprocessing_instance_count:${INSTANCE_COUNT},max_queue_size:${MAX_QUEUE_SIZE}

python3 ${FILL_TEMPLATE_SCRIPT} -i ${MODEL_FOLDER}/tensorrt_llm_bls/config.pbtxt triton_max_batch_size:${TRITON_MAX_BATCH_SIZE},decoupled_mode:${DECOUPLED_MODE},bls_instance_count:${INSTANCE_COUNT}

填充完之后,各个文件夹下面是有一些配置文件的:

bash展开代码root@GZ-LG401-G1-DOCKER-node-gpu-8:triton_model_repo# tree -L 2

.

|-- ensemble

| |-- 1

| `-- config.pbtxt

|-- postprocessing

| |-- 1

| `-- config.pbtxt

|-- preprocessing

| |-- 1

| `-- config.pbtxt

|-- tensorrt_llm

| |-- 1

| `-- config.pbtxt

`-- tensorrt_llm_bls

|-- 1

`-- config.pbtxt

10 directories, 5 files

使用 TensorRT-LLM 模型启动 Triton 服务器

bash展开代码# 'world_size' is the number of GPUs you want to use for serving. This should

# be aligned with the number of GPUs used to build the TensorRT-LLM engine.

python3 /tensorrtllm_backend/scripts/launch_triton_server.py --world_size=1 --model_repo=${MODEL_FOLDER}

这个日志表示启动成功:

bash展开代码I1122 06:29:55.578920 145 grpc_server.cc:2558] "Started GRPCInferenceService at 0.0.0.0:8001"

I1122 06:29:55.579121 145 http_server.cc:4713] "Started HTTPService at 0.0.0.0:8000"

I1122 06:29:55.620023 145 http_server.cc:362] "Started Metrics Service at 0.0.0.0:8002"

要停止容器内的 Triton 服务器,请运行:

bash展开代码pkill tritonserver

- text_input:输入文本以生成响应

- max_tokens:请求的输出 token 数量

- bad_words:脏话列表(可以为空)

- stop_words:停用词列表(可以为空)

bash展开代码curl -X POST http://101.136.8.66:8000/v2/models/ensemble/generate -d '{"text_input": "<|im_start|>system<|im_end|>\n<|im_start|>user\n你是什么模型?\n<|im_end|>\n<|im_start|>assistant", "max_tokens": 200, "bad_words": "", "stop_words": ""}'

返回:

bash展开代码# curl -X POST http://101.136.8.66:8000/v2/models/ensemble/generate -d '{"text_input": "<|im_start|>system<|im_end|>\n<|im_start|>user\n你是什么模型?\n<|im_end|>\n<|im_start|>assistant", "max_tokens": 200, "bad_words": "", "stop_words": ""}'

{"model_name":"ensemble","model_version":"1","sequence_end":false,"sequence_id":0,"sequence_index":0,"sequence_start":false,"text_output":"system\nuser\n你是什么模型?\n\nassistant 我是一个语言模型,我的功能是生成文本,与用户进行自然语言交流。请问有什么可以帮您的?"}

客户端访问

https://github.com/triton-inference-server/tensorrtllm_backend/tree/main/inflight_batcher_llm/client

安装环境

bash展开代码pip3 install tritonclient[all] pip install transformers

访问例子:

bash展开代码python3 /data/xiedong/tensorrtllm_backend/inflight_batcher_llm/client/inflight_batcher_llm_client.py --request-output-len 200 --tokenizer-dir "/data/xiedong/Qwen2.5-14B-Instruct"

bash展开代码python3 /data/xiedong/tensorrtllm_backend/inflight_batcher_llm/client/end_to_end_grpc_client.py \

--url "localhost:8001" --streaming --output-len 100 \

--prompt "你是谁?"

本文作者:Dong

本文链接:

版权声明:本博客所有文章除特别声明外,均采用 CC BY-NC。本作品采用《知识共享署名-非商业性使用 4.0 国际许可协议》进行许可。您可以在非商业用途下自由转载和修改,但必须注明出处并提供原作者链接。 许可协议。转载请注明出处!